Nov 14, 2025·Average IQ & Demographics

What is the Average IQ in the USA?

What is the average IQ in the US? It's 100 by design, with about 68% of Americans scoring between 85 and 115. Learn how the average IQ in the United States is set and why it stays at 100.

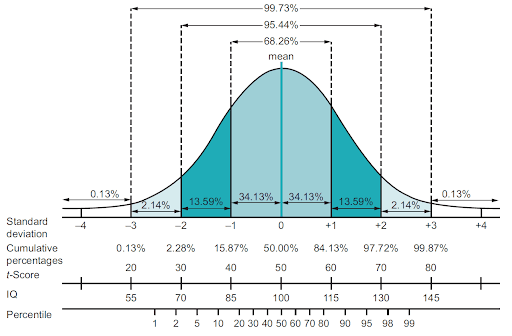

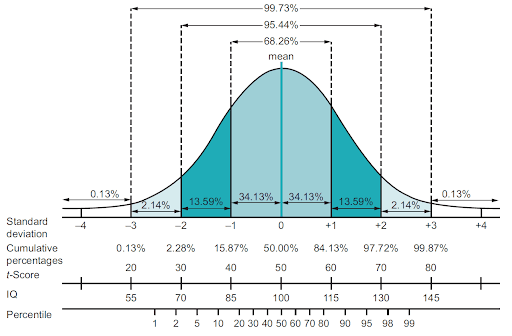

The average IQ in the United States is 100. This is by design. IQ tests are specifically created so that the average score is always 100, with a standard deviation of 15 points. This means that about 68% of Americans have IQ scores between 85 and 115, and about 95% score between 70 and 130.
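The 68% and 95% figures follow from treating IQ scores as normally distributed with a mean of 100 and a standard deviation of 15. A minimal sketch of that arithmetic, assuming an idealized normal curve (the helper name `share_between` is illustrative, not part of any IQ test's scoring software):

```python
from statistics import NormalDist

# Idealized IQ scale as described in the text: mean 100, standard deviation 15.
IQ_SCALE = NormalDist(mu=100, sigma=15)


def share_between(low: float, high: float) -> float:
    """Fraction of a normally distributed population scoring between low and high."""
    return IQ_SCALE.cdf(high) - IQ_SCALE.cdf(low)


print(f"85-115: {share_between(85, 115):.1%}")  # within one standard deviation
print(f"70-130: {share_between(70, 130):.1%}")  # within two standard deviations
```

Real score distributions only approximate the normal curve, so these shares are best read as close estimates.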

Tests designed for other countries are scaled so that the average in those countries is 100. There have been attempts to compare average IQs for different countries on the same IQ scale, but this is more complicated than it appears.

How the Average IQ is Maintained

IQ test creators use a process called "norming" to ensure that 100 remains the average score. When a new IQ test is developed, it is administered to a large, representative sample of the population, called a norm sample. The test creators then use this group's performance to set the scoring system. If the test is designed for American test takers, then the average performance of Americans becomes the benchmark for an IQ of 100.
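The core idea of norming can be sketched in a few lines. This is a simplified illustration, assuming a linear conversion from raw scores to the IQ scale based on the norm sample's mean and standard deviation; real test publishers use far larger samples and more elaborate procedures. The raw scores below and the function name `make_iq_scorer` are hypothetical.

```python
from statistics import mean, stdev


def make_iq_scorer(norm_sample_raw_scores):
    """Build a raw-score-to-IQ converter from a norm sample's raw scores."""
    sample_mean = mean(norm_sample_raw_scores)
    sample_sd = stdev(norm_sample_raw_scores)

    def to_iq(raw_score):
        # Express the raw score in standard deviations from the norm sample's
        # mean, then place it on a scale with mean 100 and SD 15.
        z = (raw_score - sample_mean) / sample_sd
        return 100 + 15 * z

    return to_iq


# Hypothetical raw scores from a (tiny) norm sample; the mean is 50.
norm_sample = [38, 42, 45, 47, 50, 50, 53, 55, 58, 62]
to_iq = make_iq_scorer(norm_sample)

print(to_iq(50))  # a raw score equal to the norm sample's average maps to 100
print(to_iq(58))  # an above-average raw score maps to an IQ above 100
```

Whatever the norm sample's average raw score happens to be, it is mapped to 100, which is why the average is 100 by construction.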

Professional IQ tests are typically renormed every 10-15 years to account for changes in the population's performance over time. Without this regular updating, the average score would drift away from 100.

Why 100 is Always Average

The number 100 is essentially arbitrary; test creators could have chosen any number to represent average intelligence. However, the convention dates back to the original "intelligence quotient" formula that German psychologist Wilhelm Stern proposed in the early 1900s: mental age divided by chronological age, later multiplied by 100, so that a child performing at the typical level for his or her age scored 100. Even though modern IQ tests no longer use Stern's quotient formula (they use deviation scores instead), the tradition of setting the average at 100 has persisted because it makes scores easy to interpret across different tests and time periods.

Does the Average IQ Change Over Time?

Raw performance on IQ tests increased throughout the 20th century, a phenomenon known as the Flynn effect. Research shows that average test performance rose by the equivalent of about 3 IQ points per decade during much of that century. However, because tests are regularly renormed, the average is always reset to 100.
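To see why renorming matters, consider what happens when today's test takers are scored against old norms. The sketch below assumes a constant, linear gain of 3 points per decade, which is a simplification of the figure cited above; the function name is hypothetical.

```python
def expected_mean_on_old_norms(years_since_norming: float,
                               points_per_decade: float = 3.0) -> float:
    """Expected population average when scored against norms of a given age.

    Assumes a constant, linear Flynn-effect gain, which is a simplification.
    """
    return 100 + points_per_decade * years_since_norming / 10


for years in (0, 10, 15, 30):
    print(f"{years:>2} years after norming: {expected_mean_on_old_norms(years)}")
```

Under this assumption, a test left unrevised for 15 years would produce an average of about 104.5 rather than 100, which is the drift that periodic renorming removes.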

The Flynn effect reflects improvements in test-taking skills, education, and familiarity with abstract reasoning rather than actual increases in general intelligence. If tests weren't updated periodically, the average IQ would gradually increase. The gains are not uniform across the tasks that appear on IQ tests, though; on some tasks, performance has actually declined. But as long as performance averaged across all tasks rises, IQ rises too. A rising IQ is not the only consequence of the Flynn effect. Because people become more adept at some tasks and less adept at others, test scores from different time periods are not directly comparable. That is why the increase in IQ caused by the Flynn effect does not represent a real increase in intelligence.

What Does "Average" Really Mean?

An IQ score tells you how well someone performed compared to others in their age group. It's a relative measure, not an absolute one. An IQ of 100 means the test taker scored better than 50% of people and worse than 50% of people. An IQ of 115 (one standard deviation above average) means the examinee scored better than about 84% of people, while an IQ of 85 means he or she scored better than only about 16%.
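The percentages above can be reproduced from the normal curve. A minimal sketch, assuming scores follow an idealized normal distribution with mean 100 and standard deviation 15 (the helper name `percentile_rank` is illustrative):

```python
from statistics import NormalDist

# Idealized IQ scale: mean 100, standard deviation 15.
IQ_SCALE = NormalDist(mu=100, sigma=15)


def percentile_rank(iq: float) -> float:
    """Percentage of the comparison group expected to score below the given IQ."""
    return 100 * IQ_SCALE.cdf(iq)


for iq in (85, 100, 115):
    print(f"IQ {iq}: scored better than about {round(percentile_rank(iq))}% of people")
```

For 85, 100, and 115 this gives roughly 16%, 50%, and 84%, matching the figures in the text.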

How Age Affects Average IQ

While the overall average IQ in the USA is 100, certain cognitive abilities change with age. Processing speed tends to decline in late adulthood, while vocabulary and learned knowledge remain stable or even increase until very late in life. Professional IQ tests account for these changes by comparing examinees only to others in their age group. A 70-year-old with an IQ of 100 has performed as well as the average 70-year-old, even though his or her raw scores on certain subtests might differ from those of a 30-year-old with the same IQ.
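Age-based comparison can be illustrated with a small sketch. The age bands, means, and standard deviations below are invented for illustration and do not come from any real test; the example also places a single subtest directly on the IQ scale for simplicity, whereas real tests combine several subtests.

```python
# Hypothetical age-group norms for a processing-speed task:
# (mean raw score, standard deviation) per age band. Values are made up.
AGE_NORMS = {
    "30-34": (60.0, 10.0),
    "70-74": (45.0, 10.0),
}


def age_normed_score(raw_score: float, age_group: str) -> float:
    """Place a raw score on a mean-100, SD-15 scale relative to one age group."""
    group_mean, group_sd = AGE_NORMS[age_group]
    return 100 + 15 * (raw_score - group_mean) / group_sd


# The same raw score of 45 is average for the older group
# but well below average for the younger group.
print(age_normed_score(45, "70-74"))
print(age_normed_score(45, "30-34"))
```

With these made-up norms, a raw score of 45 corresponds to 100 for a 70-year-old but 77.5 for a 30-year-old, which is why two people with the same IQ can have different raw scores.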
